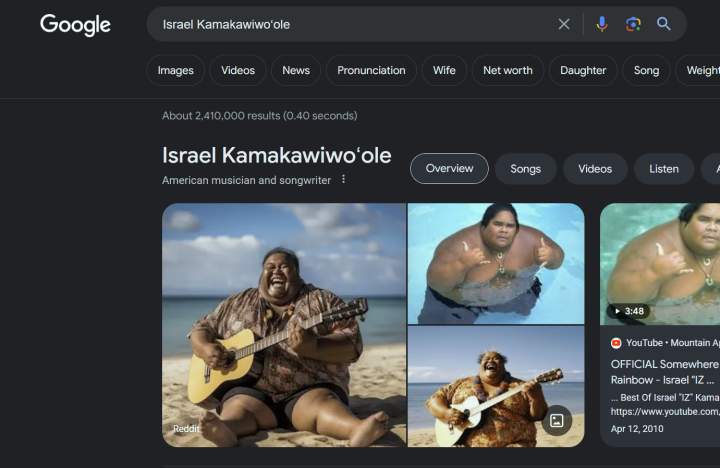

Right now, if you type “Israel Kamakawiwoʻole” into Google search, you don’t see one of the singer’s famous album covers, or an image of him performing one of his songs on his iconic ukulele. What you see first is an image of a man sitting on a beach with a smile on his face. But it isn’t a photo of the man himself taken with a camera. It’s a fake photo generated by AI. In fact, when you click on the image, it takes you to the Midjourney subreddit, where the series of images was originally posted.

I first saw this posted on X (formerly known as Twitter) by Ethan Mollick, a professor at Wharton who studies AI.

Looking at the photo up close, it’s not hard to see all the traces of AI left behind in it. The fake depth of field effect is applied unevenly, the texture on his shirt is garbled, and of course, he’s missing a finger on his left hand. But none of that is surprising. As good as AI-generated images have become over the past year, they’re still pretty easy to spot when you look closely.

The real problem, though, is that these images are showing up as the first result for a well-known figure without any watermark or other indication that they are AI-generated. Google has never guaranteed the authenticity of its image search results, but there’s something very troubling about this.

Now, there are some possible explanations for why this happened in this particular case. The Hawaiian singer, commonly known as Iz, passed away in 1997, and Google tends to favor the latest information when serving results. Since relatively few new articles or discussions about Iz have appeared since then, it’s easy to see why the algorithm picked up this recent post. And while it doesn’t feel particularly consequential for Iz, it’s not hard to imagine examples that would be much more problematic.

Even if we don’t continue to see this happen at scale in search results, it’s a prime example of why Google needs rules around this. At the very least, AI-generated images should be clearly marked in some way before things get out of hand. Failing that, Google could at least give us a way to automatically filter out AI images. Given Google’s own interest in AI-generated content, however, there are reasons to think it may want to quietly work AI-created content into its results without clearly marking it.
